Author: Elton Stoneman

Containers are everywhere. You’ll often see Docker described as the runtime for modern apps using microservice architectures, and while it is great for that, it’s a pretty narrow definition. Containers actually have a much wider range, and you can use them as the centerpiece of your infrastructure: the runtime for all of your applications, new and old.

Container 101

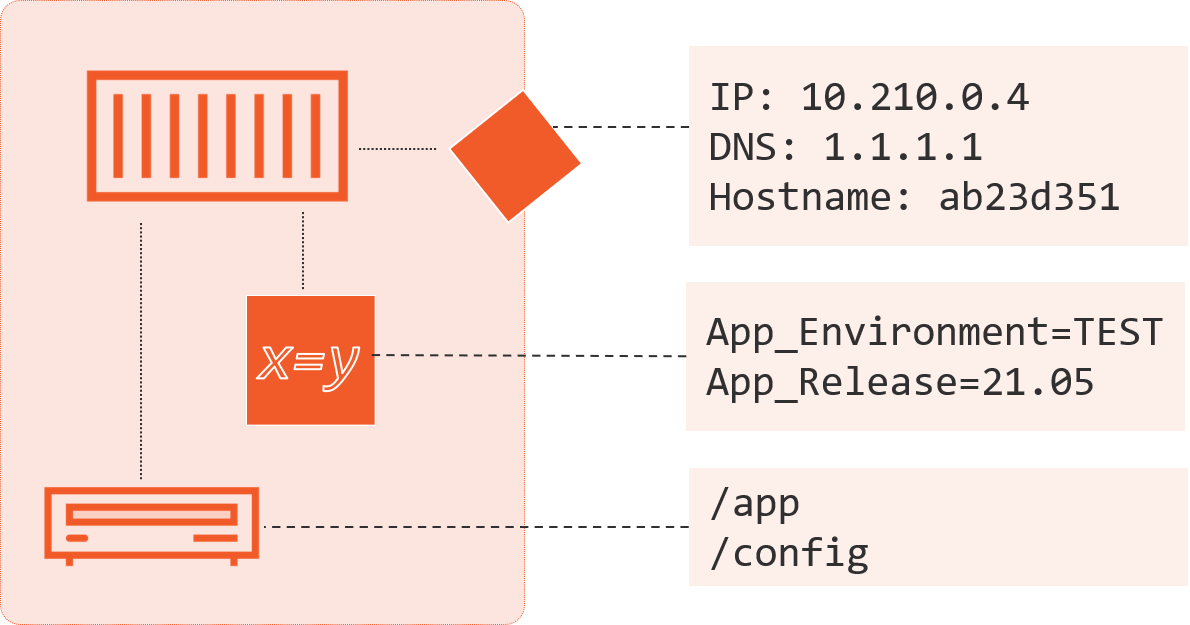

There are two big concepts in Docker: images and containers. Images are the package that stores everything your app needs to run: The application engine, third-party libraries, your own code and configuration. The image is a portable unit which you can share, and anyone who has access to the image can run your app in a container. Containers are virtualized environments. When you run a container, Docker creates a lightweight boundary around the application process which is set up in the image:

You can see here that Docker builds the compute environment, including environment variables which you can use for configuration, but it’s all transparent to the application process which thinks it has its own dedicated machine to run on. Containers have a virtualized file system (which is populated from the image and other sources) and virtualized networking, making it easy to run distributed systems in Docker where each component is running in a container.
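As a minimal sketch of the configuration point, the Dockerfile below sets a default environment variable in the image and prints it when the container starts. The variable name `APP_GREETING` and the `alpine:3.13` base image are assumptions chosen for illustration, not part of any real app:

```dockerfile
# Small Linux base image, used here only to keep the example tiny
FROM alpine:3.13

# Default configuration value baked into the image (hypothetical name)
ENV APP_GREETING="hello from the image"

# Shell-form CMD: the variable is resolved when the container starts,
# so the process sees whatever value the container was given
CMD echo "Greeting: $APP_GREETING"
```

Running the image as-is prints the default, and passing `-e APP_GREETING=...` to `docker run` overrides it for that one container without rebuilding the image.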

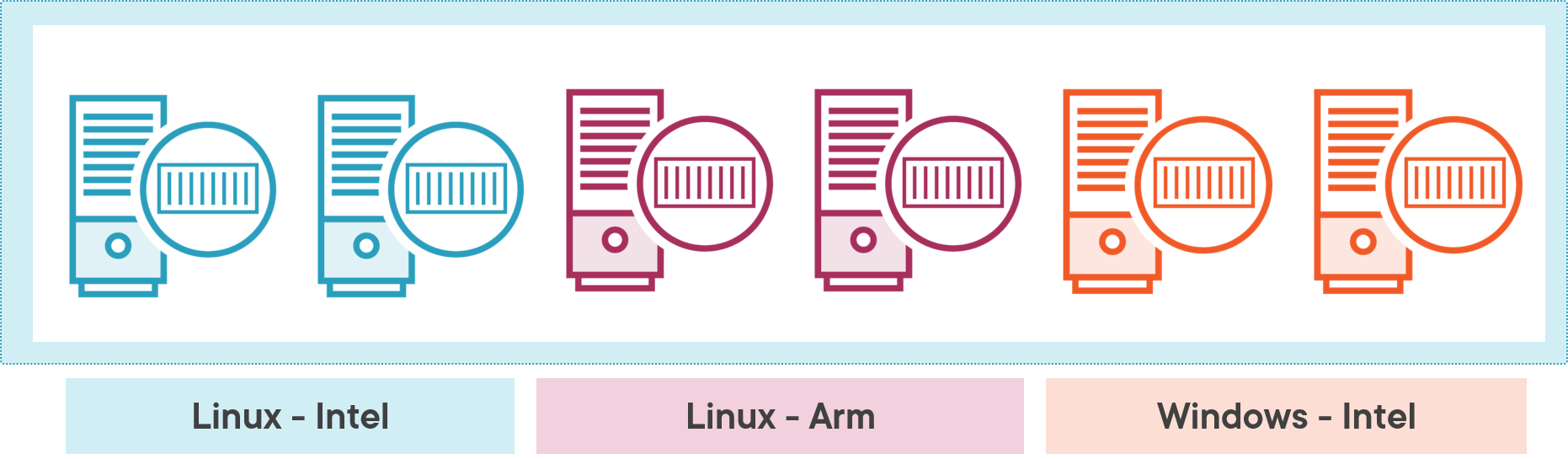

Docker gives you a packaging format and a runtime which are both generic. You can build any kind of app into an image and run it in a container, from brand new Go microservices to 15-year-old .NET monoliths. Docker is also cross-platform, so you can run containers on Windows and Linux, on Intel and Arm CPUs.

Official images and the secure supply chain

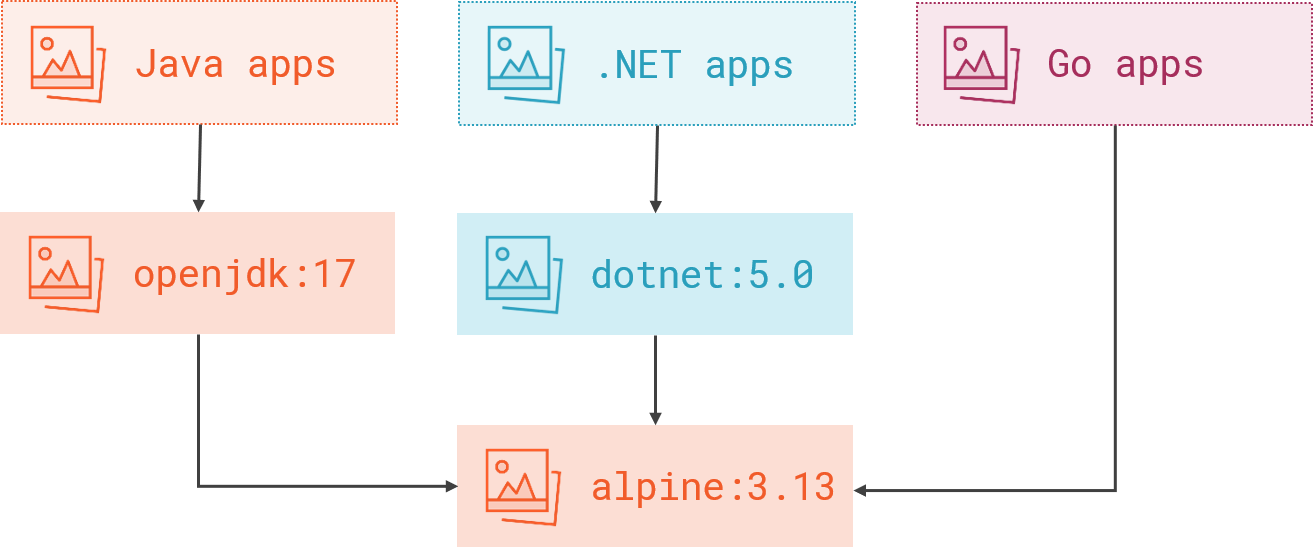

Container images are built on top of other images, and there are millions of existing images you can use as the base image for your own applications. That’s a huge boost in productivity and security. If you’re running Java apps, you can build them on top of a standard OpenJDK image. That’s an official image, which means the content is curated and approved by the Docker team, usually in conjunction with the team who actually built the product.

Official images give you a best-practice deployment to start from, together with a simple update procedure. The OpenJDK image has multiple variants for different versions of Java and different Linux distributions. You can use a specific version as the base for your application image with a simple Dockerfile, which is the script you use to package apps to run in containers:

FROM openjdk:17-jdk-alpine3.13

COPY my-app.jar /app/

CMD java -jar /app/my-app.jar

Even if that’s the first Dockerfile you’ve seen, you can probably guess what it’s doing. It’s really like a deployment document which says:

- Start from the OpenJDK image for Java 17, running on Alpine Linux

- Copy an existing application JAR file into the image

- Tell Docker the command to use when you run a container from the image, which is to start the Java app.

You create your application image with a docker build command, and Docker will download the OpenJDK image and package your content on top of it. That base image will be regularly updated on Docker Hub whenever there are patches to Java 17, or the Alpine Linux distribution. That gives you a consistent update procedure—whether it’s a security fix to the platform or OS, a new library version or new code, it’s just another docker build command.

Docker images are layered too, so you can define a core set of base images and use controls in your build and deployment pipelines to ensure all your apps use one of those base images.
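One way to apply that kind of control, sketched under assumptions: the application Dockerfile takes its base image as a build argument, so the pipeline decides which approved base is used. The registry name `registry.example.com/platform/java-base:17` is hypothetical and stands in for an organization's own curated image:

```dockerfile
# Build argument declared before FROM so it can select the base image.
# The default points at a hypothetical internal base image.
ARG BASE_IMAGE=registry.example.com/platform/java-base:17
FROM ${BASE_IMAGE}

# Package the app on top of whichever base the pipeline selected
COPY my-app.jar /app/

CMD ["java", "-jar", "/app/my-app.jar"]
```

A pipeline could then pass `--build-arg BASE_IMAGE=...` to `docker build` to pin or swap the approved base without editing the Dockerfile.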

Java is just one example. There are official images for .NET Framework and .NET 5 and 6, together with Python, Go, Node.js, Ruby, PHP, Perl, Groovy, Erlang, Swift, Haskell and plenty more. You can find them all listed in the official programming languages images on Docker Hub. And it’s not just your own apps: you can run infrastructure components like message queues and databases in containers using official images too, drawing on trusted packages from a secure supply chain.

Preparing Docker Apps for Production on Pluralsight shows you how to make your Docker images production-grade.

Consistent dev and ops experience

The Dockerfile syntax is simple but powerful. You can run Bash or PowerShell scripts as part of the Docker build, so you can do pretty much anything you need to (including compiling your apps from source using multi-stage builds, so you can say goodbye to your build server). Whatever happens inside your Dockerfile, you’ll use the same docker build command to package it, and whatever technology stack you’re using you’ll run your apps in containers with docker run commands.
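To make the multi-stage idea concrete, here is a minimal sketch that compiles a .NET app from source in one stage and packages only the published output in the final image. It assumes a single project file named `api.csproj` in the build context (a hypothetical name) that produces `api.dll`:

```dockerfile
# Stage 1: compile the app using the full SDK image
FROM mcr.microsoft.com/dotnet/sdk:5.0 AS build
WORKDIR /src

# Restore dependencies first so this layer is cached between builds
COPY api.csproj .
RUN dotnet restore

# Copy the rest of the source and publish a release build
COPY . .
RUN dotnet publish -c Release -o /out --no-restore

# Stage 2: the final image contains the runtime and the published app only
FROM mcr.microsoft.com/dotnet/aspnet:5.0
COPY --from=build /out /app
ENTRYPOINT ["dotnet", "/app/api.dll"]
```

The SDK and the source code stay in the first stage, so the image you ship is smaller, and the build machine needs nothing installed except Docker.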

Moving to containers brings a layer of consistency to all your apps. The Dockerfile lives in source control along with the application source, and if you go all-in, then the only dependency you need in order to build and run your apps is Docker. New developers can join the team, clone the repo and have the app running with just docker build and docker run commands. Distributed systems are just as simple. You can model them with Docker Compose and have a multi-container app built from source and running with a single docker compose up command.

You get a layer of consistency across all your apps, but also across all your environments. Developers can get the whole stack running in containers on their laptop using the exact same versions of all the dependencies that you’ll use in production, because those dependencies are explicitly specified in the Dockerfiles. Eliminating differences between environments and configuration steps removes a whole class of hard-to-find bugs.

The ops team will also use the same tools to deploy and manage containers in the test and production environments. Every app can be packaged and run with simple Docker commands, which makes it easy to put together build and deployment pipelines that can run anywhere. You can use Docker with existing automation solutions like Jenkins, and managed services like GitHub Actions, which support Docker and Compose out of the box.

Using Declarative Jenkins Pipelines on Pluralsight shows you how containerized builds work.

But Docker for legacy apps? Really?

Yes. In my consulting work, I’ve helped clients migrate 15-year-old Fortran software running as desktop Windows apps into containers, and then on to Kubernetes on Azure. This is one of the great things about Docker: It supports so many technologies that you can move your whole application landscape to containers. In other words, you can run everything on a single platform, from legacy monoliths to modern microservices.

I work a lot with .NET applications, and Microsoft has supported Docker as a runtime for .NET since the Windows Server 2016 release. If you have old .NET Framework apps you can package them into Docker images using a base image provided by Microsoft. Here’s a simple example of what an old ASP.NET 3.5 web app looks like in a Dockerfile:

FROM mcr.microsoft.com/dotnet/framework/aspnet:3.5-windowsservercore-ltsc2019

COPY my-app.zip .

SHELL ["powershell"]

RUN Expand-Archive -Path my-app.zip -DestinationPath /inetpub/wwwroot

This is a Windows application, so it needs to run in Docker on a Windows machine, but the Dockerfile syntax and commands are the same. This example starts from the ASP.NET 3.5 image based on Windows Server 2019, which is the official image from Microsoft so it has a regular update cycle. It copies in an existing ZIP file with the app contents, switches to use PowerShell, then expands the ZIP file to the location the web server uses for content.

Maybe that app needs to stay on ASP.NET 3.5 because it’s not worth the investment to move to .NET 5 or 6. That’s fine. You can still build and deploy that component with Docker commands. You’ll just need to have a production environment with the capacity to run Windows containers.

Other components might be straightforward to update. In those cases you can use a newer version of .NET with a base image which is cross-platform:

FROM mcr.microsoft.com/dotnet/aspnet:5.0

COPY build/ /app

ENTRYPOINT ["dotnet", "/app/api.dll"]

That image can be built to run in Linux on an efficient Arm CPU, which is becoming a low-cost option on many clouds. You can deploy a production platform with hybrid OS and CPU support and run all your components in containers:

Yes, for real! Legacy Windows apps run on the Windows servers, Linux apps which need maximum CPU power run on the Intel servers, and Linux apps that need the scale but not the compute run on the Arm servers. All in containers with the same build, deploy and management process.

The path to the cloud—any cloud

It takes some investment to migrate your applications to containers, but it’s a well-established path and you’ll find lots of guidance in the Pluralsight library to help you.

Docker may feel like a new technology, but it’s matured at an incredible pace since the first release in 2013. The Cloud Native Computing Foundation (the open source foundation behind Docker’s container runtime and Kubernetes) found in their latest survey that the use of containers in production has increased 300 percent since 2016.

All the major clouds provide a managed container service, where you can spin up containers from Docker images without worrying about any servers. Every cloud also has a managed Kubernetes service. Kubernetes is a platform for running containers, and it’s pretty much the industry standard for production deployments. Kubernetes on Azure is the same as Kubernetes on AWS and Kubernetes on GCP. Once you have your apps packaged into Docker images and modelled with the Kubernetes API, you can deploy them to any cloud—or in the datacenter.

For all these reasons, containers have become a very attractive proposition for lots of organizations. The ability to centralize on one technology platform to run all of your applications—with a consistent dev and ops experience for them all—is one of the driving forces behind the huge adoption of Docker and Kubernetes.