Author: Elton Stoneman

There are so many options for running Docker containers in the cloud that it’s easy to get lost in the choice. There are at least five to choose from on Azure alone. For most use cases, you can narrow it down to two: a managed container service (in which you run containers on demand without having to deal with servers or orchestration) and Kubernetes. It looks like a choice between portability and simplicity, but the reality is more nuanced.

Kubernetes 101

Kubernetes is the industry standard for running containers in production, and all the clouds provide a managed Kubernetes service. If you’re new to Kubernetes then there are two main concepts to understand: The cluster and the API. A cluster is a collection of servers which all have a container runtime installed. Kubernetes joins them together so you can treat them as a single pool of compute for running apps in containers. The API is the modeling language you use to describe your applications, specifying all the components and how they plug together.

Cluster management is hard. To build a reliable and scalable production cluster you need a solid understanding of networking and storage, securing your operating systems and container runtimes, and infrastructure design for high availability. That’s before you even get to configuring and deploying Kubernetes itself, and then you have the ongoing maintenance of a complex system with a release cycle that means you need to update at least twice a year.

Managed Kubernetes services simplify all of those steps, with straightforward deployments and upgrades, instantly scalable clusters, and integrations with other cloud services like load balancers and storage. You can create a Kubernetes cluster in Azure with a managed control plane (the part that runs core Kubernetes components) and dozens of servers to run your apps with a single az command:

    az aks create `
      -g resource-group-1 `
      -n aks-cluster-1 `
      --node-count 36

That leaves you with just the Kubernetes API to master. That too is complex. The API has abstractions for modeling compute, networking, configuration and storage. Even a simple app will run to hundreds of lines across multiple YAML files. The application model is completely portable, and you can use it to run your app in Kubernetes on AWS or Azure, or even on a local machine with Docker Desktop.

Managed container services

Managed Kubernetes services let you forget about the servers in the cluster, until something goes wrong and you realize you own the VMs as well as the containers that run on them. You’ll need monitoring in place to make sure the cluster is healthy. It’s also important to incorporate capacity planning to make sure you get maximum use of your servers, while leaving some headroom to scale up.

All that, along with having to learn a complex API, can seem like overkill if you just have a straightforward app you want to run in a few containers. That’s where the managed container services come in. Azure Container Instances and AWS Fargate let you bring your own Docker image, configure the environment settings and get a container up and running. You pay for the compute while your containers are up, but you don’t need to manage the servers they run on. You won’t even see the servers.

My fellow Pluralsight author and Docker Captain Julie Lerman wrote a great blog post which covers Fargate: Deploying .NET apps to containers on AWS. So here I’ll show you how a similar deployment looks using Azure Container Instances.

It’s just a single command to deploy a container to an existing Azure Resource Group:

    az container create -n pi -g pi-rg `
      --image sixeyed/pi:20.05 `
      --command-line 'dotnet Pi.Web.dll -m web' `
      --dns-name-label pi-demo `
      --ports 80

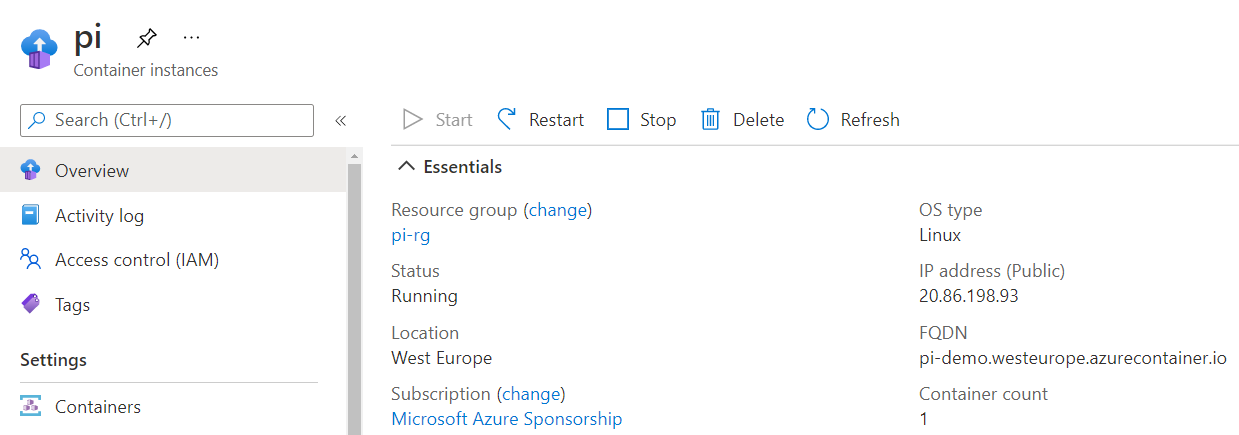

That’s it. The image is a public one on Docker Hub, and when the container is created ACI will pull the image and start the container, passing the startup command I set and publishing port 80. You get full insight and management of ACI containers in the Azure Portal as well as through the command line:

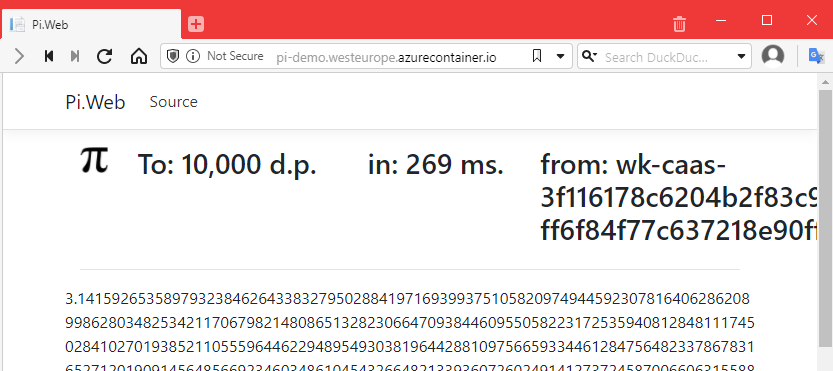

This container has a public IP address and a public DNS name (pi-demo.westeurope.azurecontainer.io), which is built from the label in my az command. I could set up my own DNS records with an A record or a CNAME, or I can browse to my app directly with the Azure address:

There’s a lot more to ACI than this. You can configure the compute resources you want, specifying CPU cores, memory and even GPUs. ACI supports Windows containers, and you can set the OS you need to match the container image you’re running. Multiple containers can run in one ACI group, and they’re connected so you can run a distributed system across multiple ACI containers. You can find all the options in the docs for the az container create command.
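As a rough sketch of those options, the same Pi container could be described in a YAML file instead of command-line arguments. The image, startup command, DNS label and region come from the example above; the API version and the CPU and memory values are assumptions for illustration, and the field names should be checked against the current ACI YAML reference before you rely on them.

```yaml
# Hypothetical ACI container group definition for the Pi app (a sketch, not the author's file).
apiVersion: '2019-12-01'          # assumed API version; newer versions exist
location: westeurope              # region implied by the DNS name shown above
name: pi
type: Microsoft.ContainerInstance/containerGroups
properties:
  osType: Linux                   # use Windows here for Windows container images
  containers:
    - name: pi
      properties:
        image: sixeyed/pi:20.05
        command: ["dotnet", "Pi.Web.dll", "-m", "web"]
        ports:
          - port: 80
        resources:
          requests:
            cpu: 1                # assumed value
            memoryInGB: 1.5       # assumed value
  ipAddress:
    type: Public
    dnsNameLabel: pi-demo
    ports:
      - protocol: tcp
        port: 80
```

A file like this would be deployed with az container create -g pi-rg --file pi-aci.yaml, where pi-aci.yaml is a hypothetical file name. Additional entries under containers would run in the same group.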

Containers-on-demand services like ACI and Fargate are so simple to use it’s easy to forget: these are production-grade container runtimes. You get the core features you need from a container platform, including configuration and storage options, without the overhead of Kubernetes. The big problem is that your app definitions aren’t portable; each cloud has its own way of modeling applications for its container service.

That’s changing with the evolution of Docker Compose.

Modeling apps with Docker Compose

Docker Compose has been around for a long time. It’s a modeling language to describe distributed apps running in containers, but it’s much simpler than the Kubernetes API. Originally Docker Compose was meant for running many containers on a single Docker server, which is one reason why it’s simple to use—you miss out on most of the features in a more complex platform like Kubernetes.

Running containers on a Docker server or laptop is still the main use case for Docker Compose, but it’s becoming a generic format for modeling applications that can run on different platforms. In the latest releases of the Docker command line, you can deploy containers directly to ACI or AWS Fargate using the Docker Compose format.

Here’s how I would model my Pi web calculator in Compose YAML:

    services:
      pi:
        image: sixeyed/pi:20.05
        entrypoint: ["dotnet", "Pi.Web.dll", "-m", "web"]
        ports:
          - "80:80"

And I can run that locally with Docker Desktop, or on a server running Docker Engine with one command:

docker compose up -d

If you’re already familiar with Compose, you should notice two things: First, the Compose spec doesn’t include a version number, as it’s no longer needed. Second, the Compose command is built right into the standard Docker command line now, so you don’t need an extra tool. The command is docker compose, not docker-compose.

The new Compose command in the Docker CLI also supports integration with ACI and Fargate. You can create a Docker Context to connect your local command line to a cloud container service, then run standard Docker commands to deploy and manage containers in the cloud.

Deploying Containerized Applications on Pluralsight walks you through all the details of this.

Using an ACI context, you can deploy the exact same Docker Compose spec to Azure, using the exact same command line. This is new functionality from a partnership between Docker and the cloud providers, so it’s early days but very promising. The Docker Compose spec is expanding so it can include provider-specific features, without compromising the simplicity of the model.

Simplicity vs. ubiquity

So if you can model your apps simply using Docker Compose, why has Kubernetes, with all its complexity, become the dominant container platform? The complexity wasn’t just put there for fun. It reflects the huge feature set, flexibility and growth potential that Kubernetes gives you. If you’re serious about moving all your apps to containers, then Kubernetes can be your platform for the long term.

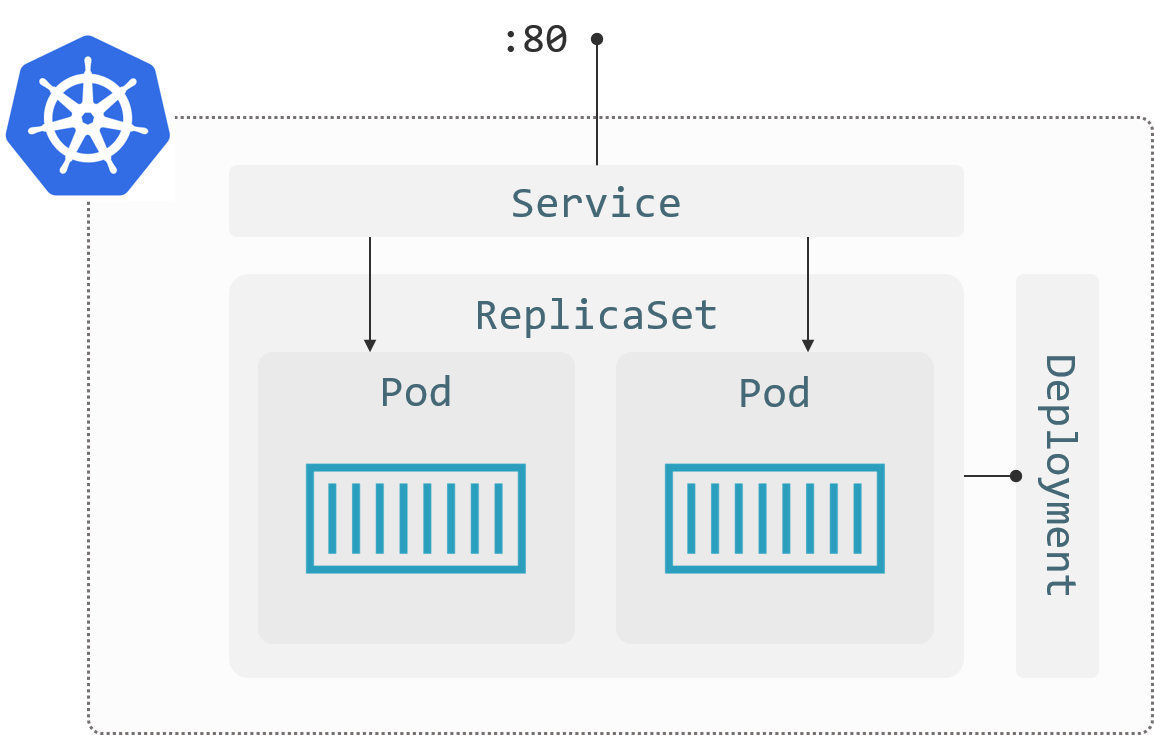

My Pi web application could be modeled fairly simply using basic Kubernetes features and deployed to AKS or EKS. I won’t go into detail on the components here, but this is all standard Kubernetes:

That gives me scale and failover with multiple containers running my app, plus load balancing between them. I could extend the model to separate the configuration of my app from the container image, meaning I can use the same image in every environment and just apply the settings I need from the cluster.
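To make that concrete, here is a minimal sketch of what such a model might look like in Kubernetes YAML. It is not the author's actual manifest: the resource names, labels, replica count and the ConfigMap setting are all hypothetical, and only the image and startup command are taken from the earlier examples.

```yaml
# Sketch only: names, labels, replica count and the ConfigMap key are hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: pi-web-config
data:
  # Assumed example setting, surfaced to the container as an environment variable
  Logging__LogLevel__Default: Warning
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pi-web
spec:
  replicas: 3                     # multiple containers for scale and failover
  selector:
    matchLabels:
      app: pi-web
  template:
    metadata:
      labels:
        app: pi-web
    spec:
      containers:
        - name: pi-web
          image: sixeyed/pi:20.05
          command: ["dotnet", "Pi.Web.dll", "-m", "web"]
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: pi-web-config   # settings come from the cluster, not the image
---
apiVersion: v1
kind: Service
metadata:
  name: pi-web
spec:
  type: LoadBalancer              # the cloud provisions a public load balancer
  selector:
    app: pi-web
  ports:
    - port: 80
      targetPort: 80
```

The Deployment keeps the requested number of replicas running, the Service spreads traffic across them, and the ConfigMap holds environment-specific settings so the same image can be used everywhere.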

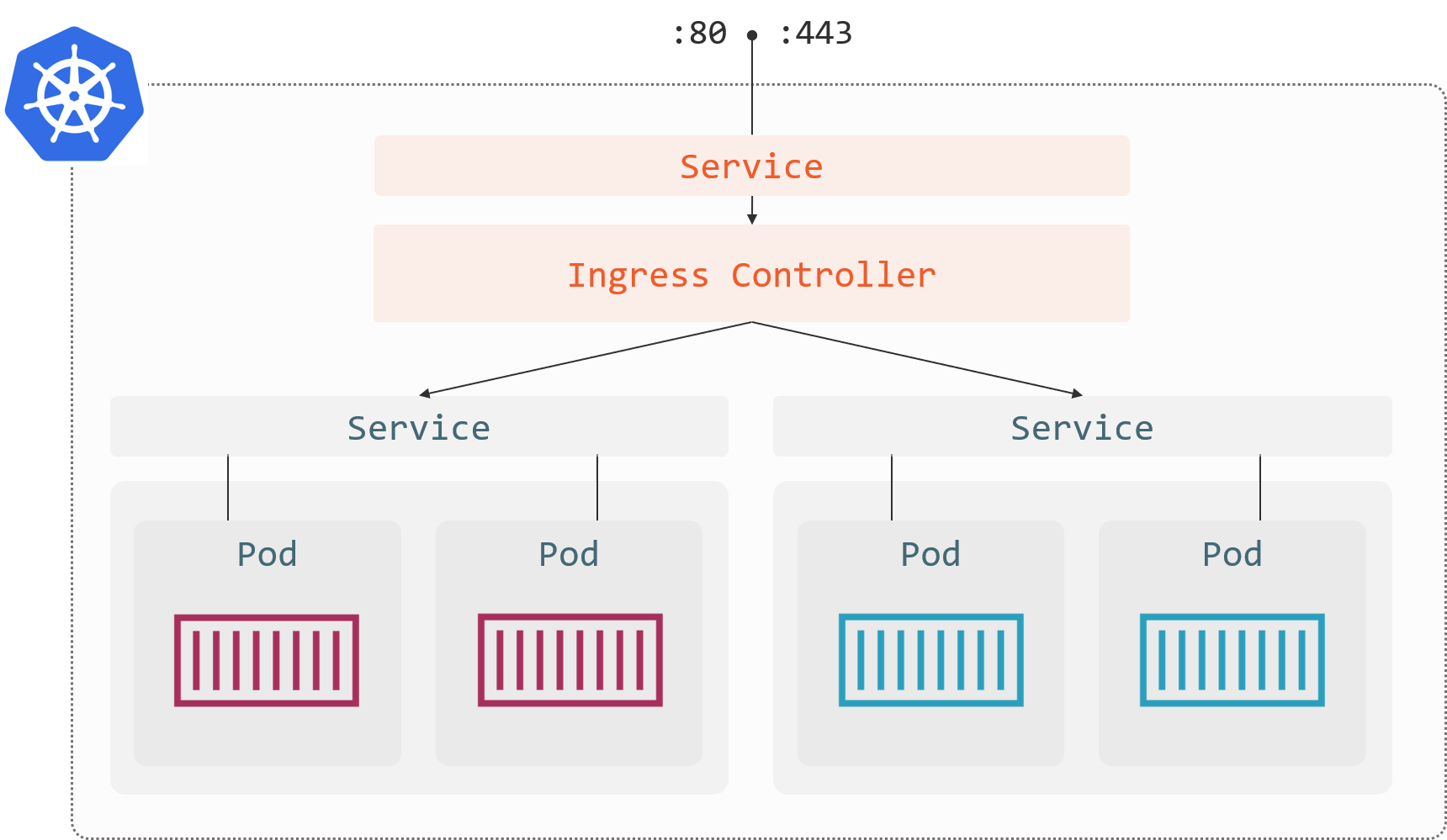

Then I might want to add another app in the same cluster, but keep a single IP address and route traffic based on the web domain in the HTTP request. That’s easy to do with Kubernetes using ingress—this simplified diagram shows the main pieces:

The ingress controller gives me routing for several apps, plus handy extras like response caching and SSL termination, so I can keep my app components simple and layer on those features at a higher level. If I decided to break my Pi app into multiple microservices, I could deploy a Service Mesh like Istio into my cluster and get security, reliability and observability between my components without needing to code for all that. And that would give me blue-green and canary deployment functionality too.
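For the domain-based routing part of that picture, a minimal Ingress sketch could look like the following. The host names, Service names and ingress class are hypothetical, and it assumes an ingress controller (NGINX here) is already installed in the cluster and that each app is exposed by its own Service.

```yaml
# Sketch only: hosts, Service names and the ingress class are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: apps
spec:
  ingressClassName: nginx         # assumes an NGINX ingress controller
  rules:
    - host: pi.example.com        # requests for this domain go to the Pi app
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: pi-web
                port:
                  number: 80
    - host: other.example.com     # a second app behind the same IP address
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: other-app
                port:
                  number: 80
```

Extras such as SSL termination and response caching are configured on the ingress controller, typically through a tls section and controller-specific annotations, which are omitted here.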

Managing Apps on Kubernetes with Istio on Pluralsight shows you the power of the Service Mesh.

That roadmap is possible because Kubernetes is such a widely used and extensible system. Pretty much anything you need to do has already been done and is easy to replicate in your own environment, because of the standard Kubernetes API. So yes, Kubernetes is complicated, but the complexity is the entry price for getting a world-class application platform that you can use in any cloud and in the datacenter.

Betting on Kubernetes

I work as a freelance consultant, and in two recent projects, I recommended deploying to cloud container services rather than Kubernetes. In both projects the recommendation was based on available resources. In one case, the team didn’t have Kubernetes experience and it was too steep a learning curve for a relatively small production footprint. In the other case it was a technical resource issue (a Windows app which needed features not yet supported by Kubernetes).

Both of those projects are now planning a migration to Kubernetes. In the first case, the client got a new customer in Asia who wanted to use the Tencent cloud rather than Azure. Tencent has its own managed container service, which is roughly equivalent to ACI, but would mean a migration project and then two separate deployment models to maintain. Instead, we modeled the app in Kubernetes and deployed to Tencent’s Kubernetes service, with a plan to move the existing ACI deployment to AKS and have a single model for both clouds.

The second project is dependent on GPU support for Windows containers in Kubernetes. While we’re waiting, the rest of the stack has been moved to AKS so that the client can run multiple apps on a single cluster, getting higher density at lower cost than running in separate ACI instances.

In both cases, we used the right container platform for the problem we were solving at the time. Managed container services are great for production deployments without the overhead of Kubernetes, but when you’re designing your solutions, be sure to keep them flexible: You may find a move to Kubernetes coming at you sooner or later.